Most servers today run Linux, so as a developer it's worth having a basic understanding of how the operating system manages memory. Key concepts include:

- Address mapping

- Memory management

- Page fault handling

Let’s start with some fundamentals. A process's virtual address space is divided into two main parts: user space and kernel space. The typical layout looks like this:

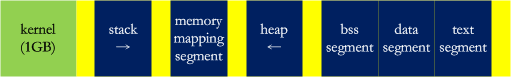

Switching from user mode to kernel mode happens through system calls, interrupts, or exceptions (such as page faults). User space is divided into several regions, each serving a different purpose.

Kernel space isn’t used arbitrarily either; it is likewise divided into regions with specific purposes.

Now let’s dive deeper into how Linux handles memory.

Address Mapping

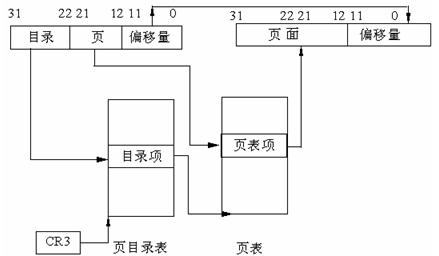

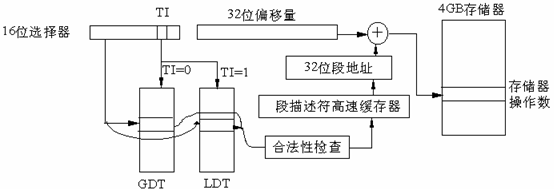

In Linux, address translation goes from logical address → linear address → physical address. Physical addresses are the simplest: they are actual locations in RAM. Logical and linear addresses are the intermediate steps. A linear address is translated into a physical address by paging: its high-order bits index a hierarchy of page tables, and its low-order bits give the offset within the page.

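This translation is performed by the MMU (Memory Management Unit), configured through control registers such as CR0 (whose PG bit turns paging on) and CR3 (which holds the physical address of the top-level page table). Logical addresses are what appear in machine instructions; on x86, a logical address consists of a segment selector plus an offset within that segment.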
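To make the page-table step concrete, here is a sketch of how a linear address is split into table indices, assuming classic 32-bit x86 paging without PAE and 4 KB pages (10 + 10 + 12 bits). 64-bit x86 uses more levels with 9-bit indices, but the idea is the same. The struct and function names are illustrative, not kernel APIs.

```c
#include <stdint.h>

/* Assumed layout: 32-bit x86, no PAE, 4 KB pages.
 *   bits 31..22 -> page directory index (10 bits)
 *   bits 21..12 -> page table index     (10 bits)
 *   bits 11..0  -> offset within page   (12 bits) */
struct linear_parts {
    uint32_t dir;     /* index into the page directory that CR3 points to */
    uint32_t table;   /* index into the page table selected by dir */
    uint32_t offset;  /* byte offset inside the 4 KB page */
};

struct linear_parts split_linear(uint32_t linear)
{
    struct linear_parts p;
    p.dir    = (linear >> 22) & 0x3FF;
    p.table  = (linear >> 12) & 0x3FF;
    p.offset =  linear        & 0xFFF;
    return p;
}
```

For example, `0xC0101234` splits into directory index `0x300`, table index `0x101`, and offset `0x234`.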

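Linux sets up its code and data segments with a base of 0 and a limit covering the whole address space (the "flat" model), so the offset in a logical address is numerically equal to the linear address. Segmentation becomes essentially a pass-through and paging does the real work, which keeps things simpler than schemes that lean heavily on segmentation.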
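A minimal sketch of the segmentation step, assuming a simplified descriptor that holds only a base and a limit (real x86 descriptors carry more fields, and the names here are hypothetical). With the flat setup Linux uses, the base is 0 and the limit covers everything, so the linear address equals the offset.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical, simplified segment descriptor: base and limit only. */
struct segment {
    uint32_t base;
    uint32_t limit;   /* highest valid offset */
};

/* Translate an offset within `seg` to a linear address.
 * Returns false on a limit violation (real hardware would fault). */
bool logical_to_linear(const struct segment *seg, uint32_t offset, uint32_t *linear)
{
    if (offset > seg->limit)
        return false;
    *linear = seg->base + offset;   /* linear = segment base + offset */
    return true;
}
```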

Memory Management

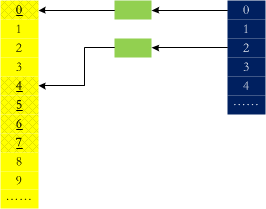

When the system boots, the kernel detects the available memory and initializes a simple early allocator called bootmem, which tracks pages with a bitmap. Scanning a bitmap is inefficient for general-purpose allocation, so once boot is far enough along, memory is handed over to the buddy system. The buddy system keeps free blocks whose sizes are powers of two pages (order 0 is 1 page, order 1 is 2 pages, order 2 is 4 pages, and so on), which makes allocation and deallocation efficient.
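The bitmap idea behind bootmem can be sketched as a first-fit search over one bit per page. This is a toy model with a hypothetical 64-page memory, not the kernel's code; it shows why a bitmap gets costly: an allocation may have to walk the whole map.

```c
#include <stddef.h>
#include <stdint.h>

#define NPAGES 64  /* size of the toy memory, in pages (assumption) */

/* One bit per page frame: 1 = in use, 0 = free. */
static uint8_t page_bitmap[NPAGES / 8];

static int page_used(size_t pfn)
{
    return (page_bitmap[pfn / 8] >> (pfn % 8)) & 1;
}

static void set_page(size_t pfn, int used)
{
    if (used)
        page_bitmap[pfn / 8] |= (uint8_t)(1u << (pfn % 8));
    else
        page_bitmap[pfn / 8] &= (uint8_t)~(1u << (pfn % 8));
}

/* First-fit: find n consecutive free pages, mark them used, and return the
 * first page frame number, or -1 if no free run is long enough. */
long bitmap_alloc(size_t n)
{
    if (n == 0 || n > NPAGES)
        return -1;
    size_t run = 0;
    for (size_t pfn = 0; pfn < NPAGES; pfn++) {
        run = page_used(pfn) ? 0 : run + 1;   /* length of current free run */
        if (run == n) {
            size_t start = pfn + 1 - n;
            for (size_t i = start; i <= pfn; i++)
                set_page(i, 1);
            return (long)start;
        }
    }
    return -1;
}

void bitmap_free(size_t start, size_t n)
{
    for (size_t i = start; i < start + n && i < NPAGES; i++)
        set_page(i, 0);
}
```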

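For example, if you need 3 pages, the request is rounded up to an order-2 block (2^2 = 4 pages). Three pages are used, and the remaining one can be split off and returned to the free lists; this is what `alloc_pages_exact()` does, whereas plain `alloc_pages()` hands back the whole power-of-two block.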
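The rounding and splitting can be sketched as follows. `pages_to_order()` and `split_block()` are hypothetical helpers; the splitting loop mirrors the idea used by the kernel's buddy allocator (keep the lower half, put the upper half on the free list of the next lower order), but it is only a model.

```c
#include <stddef.h>

/* Smallest order such that (1 << order) pages >= n. */
unsigned int pages_to_order(size_t n)
{
    unsigned int order = 0;
    while (((size_t)1 << order) < n)
        order++;
    return order;
}

/* Split a free block of order `high` starting at page index `idx` down to
 * order `low`. At each step the lower half is kept and the upper half is
 * recorded as freed in out_idx/out_order (room for high - low entries).
 * Returns the number of halves given back to the free lists. */
size_t split_block(unsigned long idx, unsigned int high, unsigned int low,
                   unsigned long *out_idx, unsigned int *out_order)
{
    size_t n = 0;
    while (high > low) {
        high--;
        out_idx[n] = idx + (1UL << high);  /* upper half of the current block */
        out_order[n] = high;
        n++;
    }
    return n;
}
```

For 3 pages, `pages_to_order(3)` is 2, so 4 pages are handed out. Splitting an order-3 block at page 0 down to order 2 keeps pages 0 to 3 and frees the order-2 block starting at page 4.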

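Now suppose pages 1 and 2 are both released. Do they get merged into one 2-page block? The helper the kernel uses to locate a block's buddy answers this (shown here as it appeared in older kernels; newer kernels have an equivalent `__find_buddy_pfn()`):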

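```c
/* Return the index of the buddy of the block of 2^order pages starting at
 * page_idx: flip bit `order`, so the two buddies differ only in that bit. */
static inline unsigned long __find_buddy_index(unsigned long page_idx, unsigned int order)
{
	return page_idx ^ (1 << order);
}
```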

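As the XOR shows, at order 0 page 0 is paired with page 1, and page 2 with page 3. Even though pages 1 and 2 are adjacent, they are not buddies and are never merged. Because only buddies merge, every block of order n starts at an index that is a multiple of 2^n; keeping blocks naturally aligned this way makes coalescing cheap and helps limit memory fragmentation.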
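Freeing works in the opposite direction: a freed block merges with its buddy for as long as the buddy is also free at the same order. The sketch below uses the same XOR trick on a hypothetical 16-page zone, with simplified bookkeeping (an array instead of the kernel's per-order free lists).

```c
#define ZONE_PAGES 16   /* toy zone size (assumption) */
#define MAX_ORD     4   /* 2^4 = 16 pages is the largest block (assumption) */

/* free_order[i] == k: a free block of order k starts at page i.
 * free_order[i] == -1: no free block starts at page i. */
static int free_order[ZONE_PAGES];

static unsigned long find_buddy_index(unsigned long idx, unsigned int order)
{
    return idx ^ (1UL << order);   /* same XOR trick as __find_buddy_index */
}

void zone_init_all_used(void)
{
    for (int i = 0; i < ZONE_PAGES; i++)
        free_order[i] = -1;
}

/* Free the block of `order` at `idx`, merging with free buddies.
 * Reports the resulting free block via final_idx/final_order. */
void buddy_free(unsigned long idx, unsigned int order,
                unsigned long *final_idx, unsigned int *final_order)
{
    while (order < MAX_ORD) {
        unsigned long buddy = find_buddy_index(idx, order);
        if (free_order[buddy] != (int)order)
            break;                    /* buddy in use or split: stop merging */
        free_order[buddy] = -1;       /* absorb the buddy */
        idx &= ~(1UL << order);       /* merged block starts at the lower buddy */
        order++;
    }
    free_order[idx] = (int)order;
    *final_idx = idx;
    *final_order = order;
}
```

Freeing page 1 and then page 2 leaves two separate order-0 blocks, because their buddies (pages 0 and 3) are still in use. Freeing page 0 then merges it with page 1 into an order-1 block, and freeing page 3 cascades into an order-2 block covering pages 0 to 3.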

To check how many free blocks of each order are available, run `cat /proc/buddyinfo`. Each row covers one memory zone, and the i-th number in the row is the count of free blocks of order i.
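The columns are easier to interpret when converted to pages: a count c in column i stands for c * 2^i free pages. Here is a small parser for one line, assuming the usual `Node N, zone NAME counts...` layout (the function name is hypothetical).

```c
#include <stdio.h>
#include <stdlib.h>

/* Parse one /proc/buddyinfo line and return the total number of free pages
 * it represents (column i = free blocks of order i, each 2^i pages).
 * Returns -1 if the line doesn't start with "Node N, zone NAME". */
long buddyinfo_free_pages(const char *line)
{
    int node, consumed = 0;
    char zone[32];
    if (sscanf(line, "Node %d, zone %31s%n", &node, zone, &consumed) != 2)
        return -1;

    const char *p = line + consumed;
    long total = 0;
    for (unsigned int order = 0; ; order++) {
        char *end;
        unsigned long count = strtoul(p, &end, 10);
        if (end == p)
            break;                          /* no more numbers on the line */
        total += (long)(count << order);    /* count blocks of 2^order pages */
        p = end;
    }
    return total;
}
```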

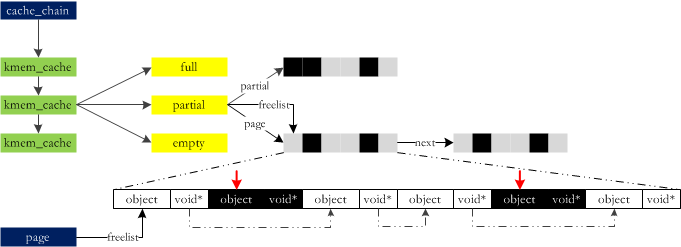

While the buddy system is effective for allocating memory in page-sized chunks (typically 4KB), many data structures in the kernel are smaller than that. Allocating full pages for small objects would be inefficient. That’s where slab allocation comes in.

The slab allocator obtains memory in bulk from the buddy system and divides it into fixed-size objects, handing them out as needed. However, as systems grew larger and more complex, especially with the rise of multi-core and NUMA architectures, the slab allocator began to show limitations, such as high metadata overhead and complex queue management.

To address these issues, the SLUB allocator was developed. It reduces the overhead of slab management by keeping slab metadata in the page structure (struct page) itself and by giving each CPU its own local cache (kmem_cache_cpu). For small embedded systems there was also SLOB, a minimal slab-like allocator with a smaller memory footprint, though it has since been removed from the mainline kernel (in Linux 6.4).
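The core slab idea (carve a page into equal-sized objects and keep the free ones on a list) can be sketched in user space. Like SLUB, this toy stores the free-list link inside each free object, so no separate metadata array is needed. It is a single-page, single-threaded model with hypothetical names; it has none of the per-CPU caching or NUMA awareness described above and is not the kernel's `kmem_cache` API.

```c
#include <stddef.h>
#include <string.h>

#define SLAB_BYTES 4096   /* one "page" of objects (assumption) */

struct obj_cache {
    unsigned char mem[SLAB_BYTES];  /* backing storage, carved into objects */
    void *freelist;                 /* first free object, or NULL */
    size_t obj_size;
    size_t nr_objs;
};

/* The link to the next free object lives in the first bytes of a free object. */
static void set_link(void *obj, void *next) { memcpy(obj, &next, sizeof next); }
static void *get_link(const void *obj) { void *next; memcpy(&next, obj, sizeof next); return next; }

int cache_init(struct obj_cache *c, size_t obj_size)
{
    if (obj_size < sizeof(void *) || obj_size > SLAB_BYTES)
        return -1;
    /* Round up to a multiple of the pointer size so objects stay aligned. */
    obj_size = (obj_size + sizeof(void *) - 1) / sizeof(void *) * sizeof(void *);
    c->obj_size = obj_size;
    c->nr_objs = SLAB_BYTES / obj_size;
    c->freelist = NULL;
    /* Push in reverse so allocations come out in address order. */
    for (size_t i = c->nr_objs; i-- > 0; ) {
        void *obj = c->mem + i * obj_size;
        set_link(obj, c->freelist);
        c->freelist = obj;
    }
    return 0;
}

void *cache_alloc(struct obj_cache *c)
{
    void *obj = c->freelist;
    if (obj)
        c->freelist = get_link(obj);   /* pop the head of the free list */
    return obj;
}

void cache_free(struct obj_cache *c, void *obj)
{
    set_link(obj, c->freelist);        /* push: reuse the object's bytes as the link */
    c->freelist = obj;
}
```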

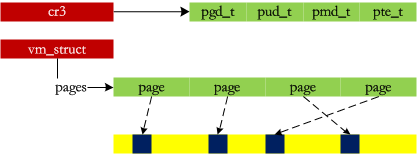

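Even with these optimizations, allocating large physically contiguous blocks remains a challenge: when memory is fragmented, the buddy system may fail to find a large enough contiguous block even though enough free pages exist in total. This is where vmalloc steps in. It takes individual pages wherever they are available and maps them into a contiguous range of kernel virtual addresses by setting up page table entries, similar to assembling scattered pieces into a complete product. The cost is extra page-table setup and TLB pressure, so vmalloc is used when physical contiguity isn't required.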
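A toy model of what vmalloc achieves: a virtually contiguous range whose pages are backed by arbitrary, non-adjacent physical frames. Here a plain array of frame numbers stands in for the page-table entries the kernel actually writes; the struct and function names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_PAGE_SHIFT 12
#define TOY_PAGE_SIZE  ((uintptr_t)1 << TOY_PAGE_SHIFT)

/* Virtually contiguous range; pfns[i] backs the i-th virtual page. */
struct toy_vmap {
    uintptr_t virt_base;
    const unsigned long *pfns;
    size_t nr_pages;
};

/* Translate a virtual address in the range to a physical address.
 * Returns false if the address lies outside the mapping. */
bool vmap_translate(const struct toy_vmap *m, uintptr_t va, uint64_t *pa)
{
    if (va < m->virt_base)
        return false;
    uintptr_t off = va - m->virt_base;
    size_t page = off >> TOY_PAGE_SHIFT;       /* which virtual page */
    if (page >= m->nr_pages)
        return false;
    /* frame base + offset within the page */
    *pa = ((uint64_t)m->pfns[page] << TOY_PAGE_SHIFT) | (off & (TOY_PAGE_SIZE - 1));
    return true;
}
```

With frames `{7, 42, 3}`, three consecutive virtual pages land on physical frames that are nowhere near each other, yet code using the range sees one contiguous buffer.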

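Besides the permanent direct mapping, Linux provides the kmap() function to map a page into the kernel's address space when needed. This matters mainly on 32-bit systems, where high memory (pages beyond the directly mapped region) has no permanent kernel virtual address.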

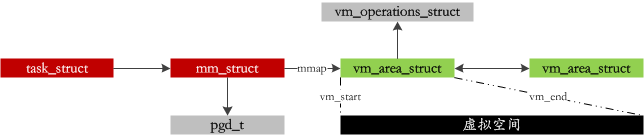

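Each process has several segments, such as code, shared libraries, global variables, heap, and stack. Linux describes each process's virtual address space with an mm_struct, which holds a set of virtual memory areas (struct vm_area_struct, or VMAs), one for each contiguous region with uniform permissions and backing.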
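Finding the area an address belongs to is a lookup over sorted, non-overlapping ranges. The sketch uses a simplified stand-in for `vm_area_struct` and a binary search over an array; the kernel itself uses a tree (a red-black tree in older kernels, a maple tree since 6.1). The names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Simplified stand-in for a VMA: the half-open range [start, end). */
struct vma {
    uintptr_t start, end;
    const char *name;     /* e.g. "code", "heap", "stack" */
};

/* Binary search over VMAs sorted by start and non-overlapping.
 * Returns the VMA containing addr, or NULL if addr falls in a hole. */
const struct vma *find_vma_containing(const struct vma *v, size_t n, uintptr_t addr)
{
    size_t lo = 0, hi = n;
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;
        if (addr < v[mid].start)
            hi = mid;
        else if (addr >= v[mid].end)
            lo = mid + 1;
        else
            return &v[mid];
    }
    return NULL;
}
```

An access to an address that falls in a hole (no VMA) is what typically ends in a segmentation fault.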

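When you call malloc(), physical memory is usually not allocated right away. Instead, a virtual address range is reserved, and a physical page is assigned only when the program first touches each page. This technique is called demand paging (or lazy allocation). It is related to, but distinct from, Copy-on-Write (COW), which lets processes share pages (for example, after fork()) and copies a page only when one of them writes to it. Both play a crucial role in efficient memory usage.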
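Demand paging can be observed from user space on Linux with `mmap()` and `mincore()`, which reports which pages of a mapping are resident. This sketch maps a few anonymous pages and checks residency before and after writing to the first one. It assumes Linux with glibc; exact residency can vary with kernel features, so treat the counts as illustrative. The helper names are hypothetical.

```c
#define _DEFAULT_SOURCE          /* expose mincore() and MAP_ANONYMOUS in glibc */
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

/* Count how many of `npages` pages starting at addr are resident in RAM. */
static int resident_pages(void *addr, size_t npages, size_t page)
{
    unsigned char vec[64];
    if (npages > sizeof vec || mincore(addr, npages * page, vec) != 0)
        return -1;
    int n = 0;
    for (size_t i = 0; i < npages; i++)
        n += vec[i] & 1;         /* low bit set: page is resident */
    return n;
}

/* Map `npages` anonymous pages and report residency before and after the
 * first write to page 0. Returns 0 on success. */
int demand_paging_demo(size_t npages, int *before, int *after)
{
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    unsigned char *p = mmap(NULL, npages * page, PROT_READ | PROT_WRITE,
                            MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;
    *before = resident_pages(p, npages, page);  /* nothing touched yet */
    p[0] = 1;                                   /* first write: fault, frame allocated */
    *after = resident_pages(p, npages, page);
    munmap(p, npages * page);
    return 0;
}
```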

Page Faults

A page fault occurs when a process accesses a virtual address that has no physical page mapped, or accesses a mapped page in a way its permissions don't allow (for example, writing to a read-only page). The processor raises an exception and the kernel's page fault handler takes over. On x86, the important information during this process includes:

- CR2: The linear address that caused the fault

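- Err_code: The error code pushed by the CPU, whose bits indicate the cause (page not present vs. protection violation, read vs. write, kernel vs. user mode)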

- Regs: The state of the CPU registers at the time of the fault
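As an illustration of how the error code is read, here is a decoder for its low bits as defined on x86 (bit 0 present/protection, bit 1 write, bit 2 user mode, bit 4 instruction fetch; the kernel names these `X86_PF_PROT`, `X86_PF_WRITE`, `X86_PF_USER`, and `X86_PF_INSTR`). The struct and function are illustrative, not kernel code.

```c
#include <stdbool.h>
#include <stdint.h>

struct pf_cause {
    bool protection;   /* bit 0: 1 = page present but access not allowed */
    bool write;        /* bit 1: 1 = the access was a write */
    bool user;         /* bit 2: 1 = the access came from user mode */
    bool ifetch;       /* bit 4: 1 = the access was an instruction fetch */
};

struct pf_cause decode_pf_error(uint32_t err)
{
    struct pf_cause c = {
        .protection = err & (1u << 0),
        .write      = err & (1u << 1),
        .user       = err & (1u << 2),
        .ifetch     = err & (1u << 4),
    };
    return c;
}
```

For example, error code `0x6` means a user-mode write to a page that is not present, the typical first touch of freshly malloc'ed memory.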

Handling a fault involves several steps, one of which is checking whether the page has been swapped out; if it has, the kernel reads it back in from swap space on disk. You can monitor swap activity with tools such as `vmstat`, whose `si` and `so` columns show memory swapped in from and out to disk.
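The decisions the handler makes can be summarized as a small classifier. This is a heavily simplified, hypothetical model loosely following the structure of `handle_pte_fault()` in the kernel's mm/memory.c; it ignores stack growth, huge pages, write notification for shared file mappings, and many other cases.

```c
#include <stdbool.h>

/* State of the page table entry for the faulting address (simplified). */
enum pte_state { PTE_NONE, PTE_SWAPPED, PTE_PRESENT };

enum fault_action {
    FAULT_SIGSEGV,        /* no VMA, or the VMA forbids this access */
    FAULT_ANON_PAGE,      /* first touch of anonymous memory: zeroed page */
    FAULT_FILE_PAGE,      /* first touch of a file mapping: page cache / disk */
    FAULT_SWAP_IN,        /* page was swapped out: read it back */
    FAULT_COPY_ON_WRITE,  /* write to a present, write-protected page */
    FAULT_SPURIOUS        /* nothing to fix (e.g. another thread already did) */
};

struct fault_info {
    bool has_vma;         /* address lies inside some VMA */
    bool vma_allows;      /* VMA permissions allow this kind of access */
    bool file_backed;     /* VMA maps a file rather than anonymous memory */
    enum pte_state pte;
    bool write;           /* faulting access was a write */
    bool pte_writable;    /* current PTE allows writes */
};

enum fault_action classify_fault(const struct fault_info *f)
{
    if (!f->has_vma || !f->vma_allows)
        return FAULT_SIGSEGV;
    switch (f->pte) {
    case PTE_NONE:
        return f->file_backed ? FAULT_FILE_PAGE : FAULT_ANON_PAGE;
    case PTE_SWAPPED:
        return FAULT_SWAP_IN;
    case PTE_PRESENT:
    default:
        return (f->write && !f->pte_writable) ? FAULT_COPY_ON_WRITE : FAULT_SPURIOUS;
    }
}
```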

Page faults also occur with memory-mapped files (mmap): the first read or write to each mapped page faults, and the kernel fills the page from the page cache, reading the file from disk if necessary. Understanding these mechanisms is crucial for optimizing application performance on Linux systems.
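A minimal sketch of a file-backed fault, assuming Linux/POSIX and a writable /tmp: the file is mapped with `mmap()`, and the first read through the mapping triggers a page fault that the kernel satisfies from the page cache. The helper name is hypothetical.

```c
#define _DEFAULT_SOURCE          /* expose mkstemp() in glibc */
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Write `text` to a temporary file, map it, and copy the first `n` bytes
 * out through the mapping into `out`. Returns 0 on success. */
int read_via_mmap(const char *text, char *out, size_t n)
{
    char path[] = "/tmp/mmap-demo-XXXXXX";
    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);                    /* file is deleted once fd and mapping are gone */

    size_t len = strlen(text);
    if (n > len || write(fd, text, len) != (ssize_t)len) {
        close(fd);
        return -1;
    }
    char *map = mmap(NULL, len, PROT_READ, MAP_PRIVATE, fd, 0);
    close(fd);                       /* the mapping stays valid after close */
    if (map == MAP_FAILED)
        return -1;
    memcpy(out, map, n);             /* first touch: page fault, filled from page cache */
    munmap(map, len);
    return 0;
}
```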
