Recently, Dishashree Gupta published an article titled "The Architecture of Convolutional Neural Networks (CNNs) Demystified" on Analytics Vidhya. This insightful piece dives deep into the structure of CNNs, specifically those used for image recognition and classification. The author also included a detailed paper with comprehensive examples, aiming to provide a thorough understanding of how CNNs function.

Introduction

Honestly, for a while I struggled to grasp the concept of deep learning. I read through various research papers and articles, but everything seemed overly complex. I tried to understand neural networks and their variations, yet I still found it challenging.

Then, one day, I decided to take a step-by-step approach and start from the basics. I broke down each technical process and manually performed the steps and calculations until I truly understood how they worked. It was time-consuming and stressful, but the results were incredible.

Now, not only do I have a solid understanding of deep learning, but I also have clear insights based on that foundation. It's one thing to apply a neural network without understanding it, and another to truly comprehend its inner workings.

Today, I want to share my journey with you: how I started with convolutional neural networks, worked through the process, and eventually figured it all out. I'll walk you through a detailed explanation so you can gain a deeper understanding of how CNNs work.

In this article, I will explore the architecture behind CNNs, which were originally developed to solve image recognition and classification problems. I'll assume you have a basic understanding of neural networks.

1. How Does the Machine See an Image?

The human brain is an incredibly powerful machine, capable of capturing and processing multiple images per second without our even noticing. Machines, however, don't work that way. The first step in image processing for a machine is to decide how to represent and read an image.

In simple terms, every image is made up of a sequence of ordered pixels. Changing the order or color of these pixels alters the image. For example, consider an image that contains the number 4.

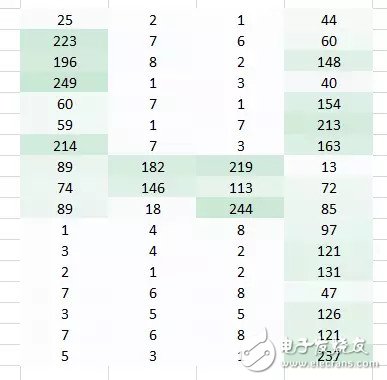

Essentially, the machine breaks the image into a matrix of pixel values, where each value represents the color at a specific position. In this example, 1 represents white and 256 represents the darkest green (for simplicity, we're considering a single color).
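To make the matrix idea concrete, here is a minimal sketch using NumPy. The 4x4 grid and its values are invented for illustration (they are not the values from the figure); they only follow the convention above, where 1 is white and larger numbers are darker.

```python
import numpy as np

# Hypothetical 4x4 single-color image that roughly traces the digit "4".
# 1 = white, 200 = a dark shade (values are made up for illustration).
image = np.array([
    [200,   1,   1, 200],
    [200,   1,   1, 200],
    [200, 200, 200, 200],
    [  1,   1,   1, 200],
])

print(image.shape)   # (4, 4): four rows, four columns
print(image[2, 0])   # 200: the pixel in row 2, column 0
```

Each entry is addressed by its row and column, so the position of a value is part of the data, not just the value itself.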

Once the image is stored in this format, the next step is to help the neural network understand the patterns and structures within the data.

2. How Can We Help the Neural Network Recognize Images?

The pixel values are arranged in a specific order.

Suppose we try to identify images using a fully connected network. What would be the process?

A fully connected network would flatten the image, treating it as a linear array of pixel values. These values would then serve as the input features the network uses to classify the image. However, this approach makes it very difficult for the network to interpret the image, because the 2D arrangement of the pixels is lost.
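Flattening can be sketched in a couple of lines. The example below reuses a made-up 4x4 image (the values are assumptions for illustration) and shows that two pixels that sit directly above one another in the image end up several positions apart in the flattened array.

```python
import numpy as np

# Hypothetical 4x4 image; values are made up for illustration.
image = np.array([
    [200,   1,   1, 200],
    [200,   1,   1, 200],
    [200, 200, 200, 200],
    [  1,   1,   1, 200],
])

# Flatten row by row into a 1D feature vector.
flat = image.reshape(-1)
print(flat.shape)    # (16,)

# Pixels (0, 0) and (1, 0) are vertical neighbors in the image...
idx_a = np.ravel_multi_index((0, 0), image.shape)
idx_b = np.ravel_multi_index((1, 0), image.shape)

# ...but they are a full row width apart once flattened.
print(idx_b - idx_a)  # 4
```

Nothing in the flattened vector tells the network that these two features used to be neighbors, which is the loss of spatial structure described above.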

Even humans find it hard to recognize the number 4 in the image above, because we lose the spatial arrangement of the pixels.

So, what can we do? We can try to extract meaningful features from the original image while preserving its spatial structure.

Case 1

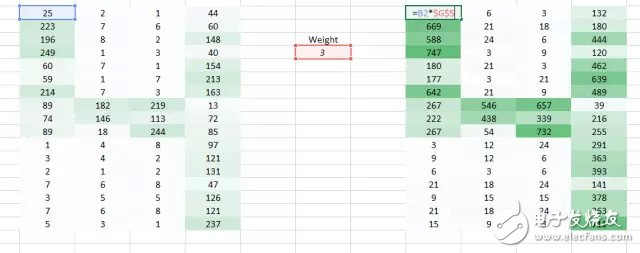

Here, we apply weights to the original pixel values.

Now it's easier to see that this is the number "4." But to feed it into a fully connected network we would still have to flatten it, and flattening discards the spatial arrangement of the pixels.

Case 2

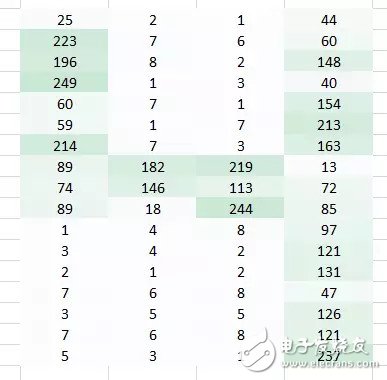

If we flatten the image completely, we lose the spatial structure entirely. We need a way to feed the image to the network without flattening it, while maintaining the 2D or 3D arrangement of pixel values.

One approach is to take two pixel values at a time instead of one. This allows the network to better capture the relationship between neighboring pixels. Since we're using two pixels at once, we also use two weight values.

You can now see that the image has changed from four columns to three. This is because each output value is computed from a window of two adjacent pixels, and the window slides one pixel at a time, so neighboring windows share a pixel and a row of four pixels yields only three outputs. Despite the reduction in size, we can still recognize that it's the number "4." Also, note that we are only considering horizontal alignment, since each window covers two consecutive pixels in the same row.
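The two-pixel window can be sketched as follows. This is a rough illustration under assumptions, not the exact computation behind the figure: the function name `horizontal_pairs`, the image values, and the weights (1.0, 0.5) are all hypothetical.

```python
import numpy as np

def horizontal_pairs(image, weights):
    """Slide a 1x2 window across each row, one pixel at a time.

    Each output is w_left * (left pixel) + w_right * (right pixel).
    """
    w_left, w_right = weights
    # image[:, :-1] holds every left pixel, image[:, 1:] every right pixel.
    return w_left * image[:, :-1] + w_right * image[:, 1:]

# Hypothetical 4x4 image; values are made up for illustration.
image = np.array([
    [200,   1,   1, 200],
    [200,   1,   1, 200],
    [200, 200, 200, 200],
    [  1,   1,   1, 200],
])

# Hypothetical weights: the left pixel counts more than the right one.
features = horizontal_pairs(image, (1.0, 0.5))

print(features.shape)  # (4, 3): one column fewer than the input
print(features[0])     # [200.5   1.5 101. ]
```

The output keeps its 2D layout, so no flattening is needed at this stage. Notice that the dark pixel on the right edge of the first row contributes only 100 to the last output because it is multiplied by the smaller weight.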

This is one method of feature extraction from images. We can clearly see the left and middle parts, but the right side appears less defined. This is mainly due to two issues:

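1. The leftmost and rightmost pixels of each row fall inside only one window position, so they are multiplied by a weight just once, while the pixels in between are covered twice.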

2. The left side remains visible because of a higher weight, while the right side is slightly obscured due to a lower weight.
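The first issue is easy to check by counting how many window positions touch each pixel in a row. The sketch below assumes a row of four pixels and a window of two pixels that slides one pixel at a time, as in Case 2; the variable names are my own.

```python
import numpy as np

width = 4    # pixels in one row of the image
window = 2   # pixels covered by the window at each position

# Count how many window positions include each pixel.
coverage = np.zeros(width, dtype=int)
for start in range(width - window + 1):
    coverage[start:start + window] += 1

print(coverage)  # [1 2 2 1]
```

The edge pixels are used once and the interior pixels twice, so information at the borders carries less influence in the result.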

Now that we've identified these two issues, we need to address them with two corresponding solutions.

Case 3
