When it comes to gesture recognition, most people probably think of image-based motion-sensing devices such as Leap Motion and Kinect. But if you have used these devices, you will know they share a flaw. Users must face the camera to perform "standard" actions, and the devices also place harsh demands on the surrounding environment, which to some extent degrades the user experience.

In fact, gesture recognition is not limited to these product forms. Another kind of gesture recognition system, based on wearable devices, has gradually entered public view.

Integrate a gesture recognition system into a watch or wristband, and the user only needs to perform a hand movement to interact with a machine. Such a system could be used not only in motion-sensing games but also to control home appliances and computers, which makes it a product category with a great deal of imaginative potential.

Among products already on the market or still in the lab, there are many technical approaches to wearable gesture recognition. Zhang Yinlai, general manager of Guangzhou Zhiqu Technology and a member of Lei Feng Net's open class group, told Lei Feng Net that wearable gesture recognition generally relies on one of three methods: EMG (electromyography), light sensing (infrared, IR), and pressure.

What are their characteristics?

EMG

EMG is one of the most popular approaches to wearable gesture recognition.

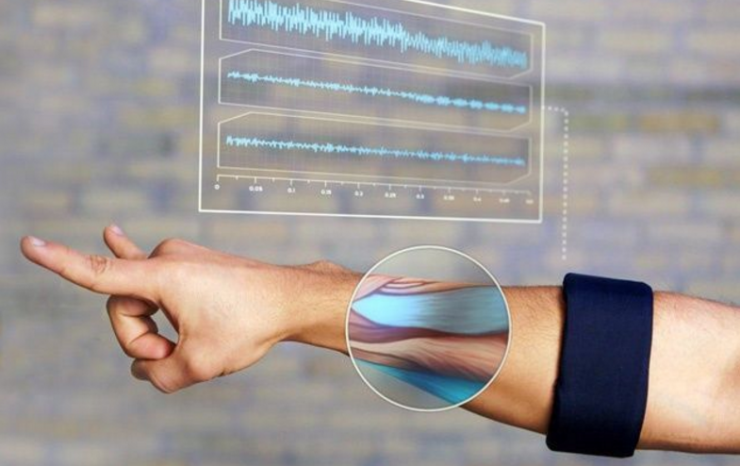

Different gestures engage different muscles in the arm, and working muscle tissue produces faint electrical potential changes (on the order of millivolts or less). A sensor can pick up this surface electromyographic signal (sEMG); this is the signal acquisition step. If several different gestures are predefined on the wearable device, each gesture can then be identified from the arm's muscle signals (the pattern judgment step). Finally, a specific algorithm maps each recognized gesture to a different machine command, allowing the wearable to control other equipment.
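The acquisition → pattern judgment → command pipeline above can be illustrated with a minimal sketch. It assumes raw sEMG has already been cut into short windows per channel, uses root-mean-square (RMS) amplitude as the only feature, and swaps in a simple nearest-centroid classifier; the gesture names, calibration values and command strings are hypothetical, and real devices use more elaborate filtering and classifiers.

```python
import math

def rms(window):
    """Root-mean-square amplitude of one channel's sEMG window."""
    return math.sqrt(sum(x * x for x in window) / len(window))

def features(channels):
    """Signal acquisition output -> feature vector: one RMS value per channel."""
    return [rms(ch) for ch in channels]

def train_centroids(examples):
    """examples maps gesture name -> feature vectors recorded while the user
    performs that predefined gesture. Returns gesture -> mean vector."""
    centroids = {}
    for gesture, vectors in examples.items():
        dims = len(vectors[0])
        centroids[gesture] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dims)]
    return centroids

def classify(vec, centroids):
    """Pattern judgment: the gesture whose centroid is nearest (Euclidean)."""
    return min(centroids, key=lambda g: math.dist(vec, centroids[g]))

# Hypothetical gesture -> machine command table.
COMMANDS = {"rest": "NOOP", "fist": "VOLUME_DOWN", "spread": "VOLUME_UP"}

# Calibration data for a two-channel device (made-up values).
centroids = train_centroids({
    "rest":   [[0.02, 0.02], [0.03, 0.02]],
    "fist":   [[0.60, 0.20], [0.55, 0.25]],
    "spread": [[0.15, 0.70], [0.20, 0.65]],
})

window = [[0.5, -0.6, 0.55, -0.5], [0.2, -0.25, 0.2, -0.2]]  # one raw window per channel
gesture = classify(features(window), centroids)
print(gesture, COMMANDS[gesture])  # fist VOLUME_DOWN
```

The per-user calibration step matters because muscle signal strength varies between people and between wearings; the centroids are what "pre-defining" gestures amounts to in this sketch.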

In addition to the myoelectric sensors that pick up electrical signals, this approach also uses multi-axis motion sensors to recognize more gestures with greater accuracy. Adding sensors raises recognition accuracy, but power consumption and computational load rise with it.

One example is the MYO armband from Thalmic Labs. It has 8 built-in myoelectric sensors and a 9-axis inertial measurement unit (gyroscope, accelerometer, and magnetometer). It captures the electrical signals of the forearm muscles and runs pattern recognition on them to determine which gesture you are making.
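To show how a device with 8 EMG channels and a 9-axis IMU might combine the two sources, the sketch below concatenates per-channel EMG RMS values with the mean of each IMU axis into a single 17-value feature vector that a classifier could consume. The 8 + 9 layout follows the hardware counts above; the function name and the choice of plain means are assumptions for illustration, not Thalmic Labs' actual processing and not the Myo SDK.

```python
import math

EMG_CHANNELS = 8   # myoelectric sensors, per the hardware description
IMU_AXES = 9       # 3-axis gyroscope + 3-axis accelerometer + 3-axis magnetometer

def fused_features(emg_window, imu_window):
    """emg_window: EMG_CHANNELS lists of raw samples.
    imu_window: list of IMU readings, each a tuple of IMU_AXES values.
    Returns EMG RMS per channel followed by the mean of each IMU axis."""
    if len(emg_window) != EMG_CHANNELS:
        raise ValueError("expected one sample list per EMG channel")
    emg_part = [math.sqrt(sum(x * x for x in ch) / len(ch)) for ch in emg_window]
    imu_part = [sum(r[axis] for r in imu_window) / len(imu_window) for axis in range(IMU_AXES)]
    return emg_part + imu_part

emg = [[0.1, -0.1]] * EMG_CHANNELS
imu = [tuple(range(IMU_AXES)), tuple(range(IMU_AXES))]
vec = fused_features(emg, imu)
print(len(vec))  # 17
```

Every extra sensor lengthens this vector, which is one concrete way the accuracy-versus-computation trade-off described above shows up.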

Because the information it collects and identifies comes almost entirely from the human body, the myoelectric approach is barely affected by the environment (for example, lighting and operating distance), and its algorithmic requirements are relatively low.

However, this approach has shortcomings too. Zhang Yinlai told Lei Feng Net: "Myoelectricity is very sensitive to humidity, so it is not suitable for underwater or humid environments. Sweating will also affect the experience."

Infrared

Infrared cameras play an important role in capturing object depth information; both Leap Motion and Kinect rely on them.

What are the characteristics of wearable infrared solutions?

In terms of technical principles, there is not much difference between the two kinds of products: both use image recognition to achieve human-computer interaction. The components of wearable infrared solutions, however, are simpler.

Using built-in sensors and infrared sensors, such a device can detect the contours of the user's wrist to identify movements. Amiigo, from an MIT startup team, is one example.
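As a rough illustration of contour-based recognition, assume the band carries a small row of IR emitter/receiver pairs around the wrist, each reporting a reflected-intensity value. The sketch compares a reading against stored per-gesture templates using Pearson correlation, which ignores overall brightness (for example, a dimmer reading from lower skin reflectance) and responds only to the shape of the profile. Sensor count, template values, the threshold and the function names are all hypothetical; this is not Amiigo's method.

```python
import math

def pearson(a, b):
    """Pearson correlation between two equal-length intensity profiles."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = math.sqrt(sum((x - ma) ** 2 for x in a))
    vb = math.sqrt(sum((y - mb) ** 2 for y in b))
    if va == 0 or vb == 0:
        return 0.0  # a flat profile carries no contour information
    return cov / (va * vb)

def match_contour(reading, templates, min_corr=0.8):
    """Return the best-matching gesture, or None if nothing is similar enough."""
    best = max(templates, key=lambda g: pearson(reading, templates[g]))
    return best if pearson(reading, templates[best]) >= min_corr else None

# Hypothetical wrist-contour templates from 5 IR sensors.
templates = {
    "fist":  [0.2, 0.5, 0.9, 0.5, 0.2],
    "point": [0.8, 0.4, 0.2, 0.4, 0.8],
}
reading = [0.1, 0.25, 0.45, 0.25, 0.1]  # same shape as "fist", half the intensity
print(match_contour(reading, templates))  # fist
```

Normalizing away absolute intensity only partly addresses the skin-color concern raised later in this section; uneven features such as tattoos change the profile's shape and are not handled here.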

The infrared sensors also allow the device to offer extended functions, such as measuring the user's heart rate, blood oxygen level, and other physiological data, which could help address some bottlenecks in the medical field.
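Heart-rate measurement with infrared light is usually done by photoplethysmography (PPG): reflected light varies slightly with each pulse, so counting those pulses over time gives beats per minute. The sketch below is a deliberately simplified version that counts local maxima above the signal mean, with a minimum spacing between beats, on a synthetic 1.2 Hz signal. The sampling rate, the 0.3 s spacing and the synthetic data are assumptions; real pipelines filter noise and motion artifacts first, and blood-oxygen estimation needs more than one wavelength, which is not shown.

```python
import math

def estimate_bpm(signal, fs, min_interval_s=0.3):
    """Estimate heart rate from a PPG-style signal by counting pulse peaks.
    A sample counts as a peak if it is a local maximum above the signal mean
    and at least min_interval_s after the previously accepted peak."""
    mean = sum(signal) / len(signal)
    min_gap = int(min_interval_s * fs)
    peaks = []
    for i in range(1, len(signal) - 1):
        is_local_max = signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]
        if is_local_max and signal[i] > mean:
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    duration_s = len(signal) / fs
    return len(peaks) * 60 / duration_s

fs = 50  # samples per second (assumed)
# 10 seconds of a clean 1.2 Hz pulse, i.e. 72 beats per minute.
synthetic = [math.sin(2 * math.pi * 1.2 * i / fs) for i in range(10 * fs)]
print(round(estimate_bpm(synthetic, fs)))  # 72
```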

Although both use image recognition, wearable infrared gesture recognition has an advantage over devices such as Kinect: because the device sits close to the object being identified, it is less affected by lighting.

Seen from another angle, precisely because this approach identifies movements through image recognition, the device has to process far more information than a myoelectric device does, which places high demands on the processing algorithms. Performance may also vary among people with different skin colors or with tattoos, so accuracy is only average.

Zhang Yinlai told Lei Feng Net.

Pressure

If you understand how the EMG approach works, the pressure approach is not hard to grasp. The two have much in common, but the pressure approach detects the state of the tendons through pressure changes. On the hardware side, the myoelectric sensors are replaced with pressure sensors (usually several of them, so that the values for different gestures can be read accurately); on the algorithm side, the input changes from electrical signals to pressure information.
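Because the pressure approach swaps the input rather than the pipeline, the same template-matching idea carries over. The sketch below adds one step that matters for strap-mounted pressure sensors: a per-user baseline, recorded with the hand relaxed, is subtracted so that only tendon-driven pressure changes are compared. The four-sensor layout, the values (in arbitrary units) and the function names are hypothetical.

```python
import math

def calibrate_baseline(rest_frames):
    """Average several frames recorded with the hand relaxed; each frame holds
    one reading per pressure sensor. This captures static strap pressure,
    which differs between users and between wearings."""
    n = len(rest_frames)
    return [sum(f[i] for f in rest_frames) / n for i in range(len(rest_frames[0]))]

def classify_pressure(frame, baseline, templates):
    """Subtract the baseline, then return the gesture whose template
    (expected pressure change per sensor) is nearest."""
    delta = [p - b for p, b in zip(frame, baseline)]
    return min(templates, key=lambda g: math.dist(delta, templates[g]))

baseline = calibrate_baseline([[1.0, 1.2, 0.9, 1.1], [1.0, 1.2, 0.9, 1.1]])
templates = {
    "rest":  [0.0, 0.0, 0.0, 0.0],
    "fist":  [0.4, 0.3, 0.1, 0.0],
    "pinch": [0.0, 0.1, 0.4, 0.3],
}
print(classify_pressure([1.35, 1.5, 1.0, 1.1], baseline, templates))  # fist
```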

Of course, it is also the hardest of the three approaches to turn into a product.

This kind of pressure approach is very difficult to implement, because people differ too much from one another, and any shaking of the arm produces pressure changes of its own. Without strong algorithm support, it is basically hard to turn into a product.

So said another Zhiqu guest at the open class.
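One simple way to keep brief, shake-induced pressure spikes from triggering commands is to smooth the per-frame classifications over time. The sketch below uses majority voting over a sliding window: a gesture is reported only after it wins more than half of the most recent frames. This is a generic smoothing technique offered as an illustration, not the algorithm the guests refer to; the class name and window size are assumptions.

```python
from collections import Counter, deque

class GestureDebouncer:
    """Reports a gesture only once it has won a majority of the last `window`
    per-frame classifications, so isolated spikes are ignored."""

    def __init__(self, window=5):
        self.recent = deque(maxlen=window)

    def update(self, label):
        self.recent.append(label)
        if len(self.recent) < self.recent.maxlen:
            return None  # not enough history yet
        winner, count = Counter(self.recent).most_common(1)[0]
        return winner if count > self.recent.maxlen // 2 else None

debouncer = GestureDebouncer(window=5)
# The lone "fist" in frame 2 is a shake artifact; the later run is a real fist.
frames = ["rest", "fist", "rest", "rest", "rest", "fist", "fist", "fist", "fist"]
outputs = [debouncer.update(f) for f in frames]
print(outputs)
```

The cost of this smoothing is latency: a real gesture is reported only after it dominates the window, so the window size trades responsiveness against robustness.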

To sum up

Viewed along three dimensions, namely power consumption, environmental sensitivity, and recognition difficulty, each of the three approaches has its own strengths and weaknesses:

- Power consumption: infrared > myoelectric > pressure
- Environmental sensitivity: infrared > myoelectric > pressure
- Recognition difficulty: pressure > infrared > myoelectric

However, judging from existing products, the user experience still has much room for improvement. Because the technology is not yet mature, only a few startups are building wearable gesture recognition products, and fewer still have reached mass production.

The barrier to entry for wearable gesture recognition lies in the algorithms for information collection and pattern recognition. Connectivity only requires adding a BLE module inside the device. The technology may not seem difficult, but guaranteeing a good user experience is. Zhang Yinlai believes:

Wearable interaction is still at the exploration stage. Everything from speech to gestures is an attempt to move closer to the natural ways humans interact. Because of current technical constraints (energy consumption, computing power, and so on), only some compromises can be adopted for now. But if chip technology, materials technology, and battery technology make major breakthroughs in the future, these problems will be resolved too. By then, there will be processors powerful enough to handle optical, mechanical, and bioelectrical quantities at the same time, materials good enough that these devices can be worn without any feeling of tightness, and enough energy to support several months of battery life. Wearables will then rely not on analyzing a single physical quantity but on comprehensive multi-sensor analysis to give users the most sensible experience.
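On the point that connectivity only requires adding a BLE module: once the gesture is recognized on the device, only a few bytes need to cross the link. The sketch below packs a recognized gesture into a compact binary payload that fits in a single BLE notification at the default ATT_MTU of 23 bytes (20 bytes of value after the 3-byte header). The payload layout, gesture IDs and function names are hypothetical, and actually transmitting the bytes would require a BLE stack exposing a GATT characteristic with notifications, which is not shown.

```python
import struct

# Hypothetical payload layout: gesture id (uint8), confidence 0-100 (uint8),
# timestamp in milliseconds (uint32), little-endian, no padding.
PAYLOAD_FORMAT = "<BBI"
MAX_NOTIFY_BYTES = 20  # default ATT_MTU (23 bytes) minus the 3-byte notification header

GESTURE_IDS = {"rest": 0, "fist": 1, "spread": 2}
GESTURE_NAMES = {v: k for k, v in GESTURE_IDS.items()}

def encode_gesture(gesture, confidence, timestamp_ms):
    """Pack a recognized gesture into bytes for a BLE notification."""
    payload = struct.pack(PAYLOAD_FORMAT, GESTURE_IDS[gesture], confidence, timestamp_ms)
    if len(payload) > MAX_NOTIFY_BYTES:
        raise ValueError("payload does not fit in one default-MTU notification")
    return payload

def decode_gesture(payload):
    """Inverse of encode_gesture, as the receiving phone or appliance would run it."""
    gesture_id, confidence, timestamp_ms = struct.unpack(PAYLOAD_FORMAT, payload)
    return GESTURE_NAMES[gesture_id], confidence, timestamp_ms

packet = encode_gesture("fist", 92, 123456)
print(len(packet), decode_gesture(packet))  # 6 ('fist', 92, 123456)
```

Sending recognized gestures rather than raw sensor streams keeps radio time, and therefore power consumption, low; this is one reason the hard part lies in on-device recognition rather than in the link itself.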

There is still a long way to go, but if users could control all kinds of devices at will, wouldn't that be something worth looking forward to?
