Lei Feng Network: By Adriana Hamacher, source Robohub. Compiled exclusively by Lei Feng Network; do not reprint without permission.

A new study shows that assistive robots that can express themselves and communicate are more likely to win people's trust when working alongside humans, even if they make mistakes. Humanoid robots also have a downside, however: users may lie to them to avoid hurting their feelings.

These are the main findings of the research I carried out for my master's degree in human-computer interaction at UCL. The aim of the study was to design a robot assistant that humans can trust. At the end of this month, I will present the results at the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN).

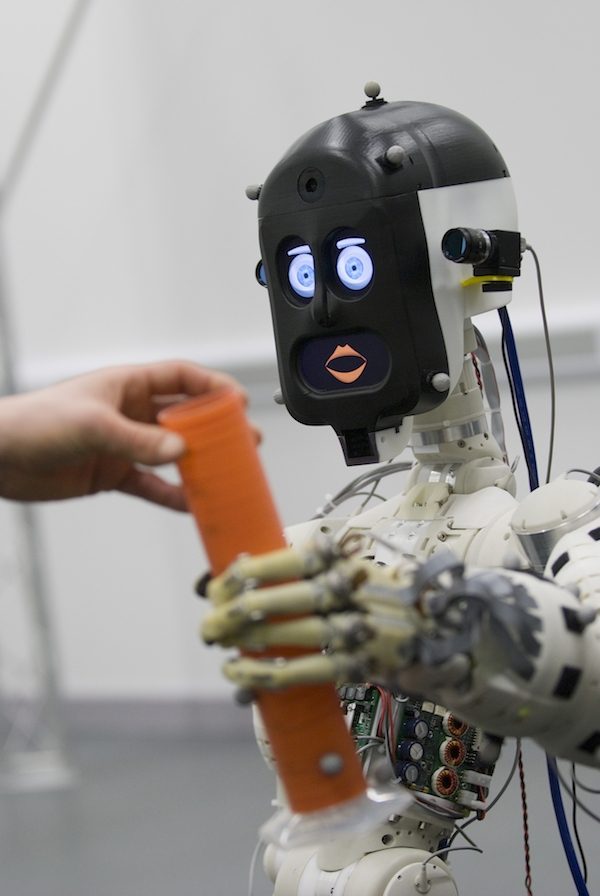

I had two supervisors: Professor Nadia Berthouze of UCL and Professor Kerstin Eder of the University of Bristol. Under their guidance, I designed an experiment with a humanoid assistive robot that helps the user make an omelette. Specifically, it hands over the eggs (made of plastic), salt and oil, and tries to make amends after breaking an egg. The purpose of the experiment was to see how a robot that has made a mistake can communicate effectively with the user about that mistake and then regain the user's trust.

The results of the experiment were surprising. Most users preferred the robot that could communicate with them over a more efficient, less error-prone robot, even though it took 50% longer to complete the task. Users responded well to the communicative robot's apologies, especially when it showed a sad expression, a sign that it knew it had made a mistake.

At the end of the experiment, I programmed the robot to ask the user whether they would give it the job of kitchen assistant. The user could only answer "yes" or "no" and could not explain the answer. Many users were reluctant to answer the question, and some were visibly uncomfortable. One user recalled that when he answered "no", the robot looked very sad, although it had not been programmed to. Some people called this emotional blackmail, and some even lied to the robot.

These reactions suggest that, having seen the robot's human-like sadness after it broke the egg, many users could already picture the expression on its face if they answered "no". As a result they hesitated, reluctant to say "no", and some even worried that the robot might feel distress as a human would.

The study highlights that human-like traits in robots, such as regret, can be effective in dispelling dissatisfaction, but we must choose carefully which traits we want to focus on and reproduce. Without ground rules, we may end up designing robots with widely differing personalities.

Prof. Kerstin Eder, who leads research on verification and validation for robot safety at the Bristol Robotics Laboratory, said: "Trust in our robot partners is the basis for successful human-robot interaction. This research gives us a deeper understanding of how communication and emotional expression from robots can mitigate the effects of unpredictable behavior in collaborative robots. Combining thorough verification and validation with an understanding of these human factors will help engineers design robot assistants that are worthy of people's trust."

My research is aligned with the Trustworthy Robotic Assistants project, funded by the UK's Engineering and Physical Sciences Research Council (EPSRC). The project uses a new generation of verification and validation techniques to confirm the safety and reliability of robots, which should greatly improve our quality of life in the future.

Via robohub