Lei Feng Network note: by Ryan O'Hare, source Daily Mail; compiled exclusively by Lei Feng Network. Do not reprint without permission.

Unconscious gender bias undoubtedly affects the computer algorithms used to analyze language. For example, when an employer enters "programmer" while searching resumes, the results rank resumes from male job seekers first, because the word "programmer" is more closely associated with men than with women. Similarly, when the search term is "receptionist" (front desk), resumes from female applicants are displayed first. Such sexist algorithms are unfair to job seekers.

Adam Kalai, a programmer at Microsoft, is now working with scientists at Boston University to remove the gender discrimination of human society that computer algorithms reflect, using a technique called "word embedding" (word vectors). The technique teaches computers to process language by exploring the associations between words. With this method, a computer can pick up on context by comparing words against "she" and "he." In practice, the computer finds fitting pairs such as "sister-brother" and "queen-king."
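As a rough illustration of how such pairings can fall out of word vectors, here is a minimal sketch. The three-dimensional vectors, the `VECTORS` table, and the `analogy` helper are invented for this example and are not taken from the research; real embeddings are learned from large text collections and have hundreds of dimensions.

```python
import math

# Toy "word vectors" made up for illustration only (an assumption, not real data).
# Axis 0 ~ gender (+1 female, -1 male), axis 1 ~ royalty, axis 2 ~ family.
VECTORS = {
    "she":     [1.0, 0.0, 0.0],
    "he":      [-1.0, 0.0, 0.0],
    "sister":  [1.0, 0.0, 1.0],
    "brother": [-1.0, 0.0, 1.0],
    "queen":   [1.0, 1.0, 0.0],
    "king":    [-1.0, 1.0, 0.0],
}


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def analogy(a, b, c, vectors=VECTORS):
    """Answer "a is to b as c is to ?" by finding the word closest to b - a + c."""
    target = [vb - va + vc
              for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    # Exclude the three query words so the answer is a new word.
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))


print(analogy("she", "he", "sister"))
print(analogy("she", "he", "queen"))
```

The same arithmetic that produces harmless pairs like these would also produce biased ones if an occupation word sat closer to "he" or "she" in the vector space.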

In an interview with National Public Radio (NPR) in the United States, Kalai said: "We are trying to avoid reflecting gender-related content in the news... but you will find that these word pairings show blatant gender discrimination."

In a research paper newly published online, the team reports that computers can be trained to ignore certain specific word associations while retaining the key information they need. They explained: "Our goal is to reduce the prejudices in word matching, while retaining some of the useful features in the word vector." By slightly adjusting the algorithm, they can remove the link between "receptionist" and "female" while keeping appropriate pairings such as "queen" and "female."
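One common way to drop an unwanted association while keeping a legitimate one is to subtract a word's component along a "gender direction" only for words that should be gender-neutral. The sketch below illustrates that idea under stated assumptions: the two-dimensional vectors, the `NEUTRAL_WORDS` list, and the function names are hypothetical, and the article does not confirm that this is the exact adjustment the team made.

```python
import math

# Toy 2-D vectors made up for illustration (an assumption, not real data).
# Axis 0 ~ gender, axis 1 ~ all other meaning.
VECTORS = {
    "she":          [1.0, 0.0],
    "he":           [-1.0, 0.0],
    "queen":        [0.9, 0.5],
    "receptionist": [0.6, 0.8],
    "programmer":   [-0.6, 0.8],
}

# Hypothetical list of words that should carry no gender association.
NEUTRAL_WORDS = {"receptionist", "programmer"}


def dot(u, v):
    """Dot product of two vectors of equal length."""
    return sum(a * b for a, b in zip(u, v))


def gender_direction(vectors):
    """Unit vector pointing from "he" toward "she"."""
    diff = [s - h for s, h in zip(vectors["she"], vectors["he"])]
    length = math.sqrt(dot(diff, diff))
    return [x / length for x in diff]


def debias(vectors, neutral_words):
    """Remove the gender component from neutral words; leave the rest untouched."""
    g = gender_direction(vectors)
    result = {}
    for word, vec in vectors.items():
        if word in neutral_words:
            projection = dot(vec, g)  # signed amount of "gender" in the word
            result[word] = [a - projection * b for a, b in zip(vec, g)]
        else:
            result[word] = list(vec)
    return result


g = gender_direction(VECTORS)
debiased = debias(VECTORS, NEUTRAL_WORDS)
for word in ("receptionist", "programmer", "queen"):
    print(word, round(dot(VECTORS[word], g), 3), "->", round(dot(debiased[word], g), 3))
```

The design choice here is that the list of neutral words is supplied by people, so the outcome depends on which words are judged to be legitimately gendered.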

Although the algorithm still generates pairings based on gender, it ignores some potential associations, while recognizing that the gender characteristics of some words are genuinely more distinct. The team believes this approach lets computers keep learning through word vectors, preserving valid connections while escaping inherent human biases. According to NPR, the catch is that processing language does not necessarily require "word vectors" of this kind. The algorithm can distinguish by gender and ethnicity, but that may only be needed when researchers want to focus on a specific gender or ethnic group.

Things become more complicated when the technique is used to screen data free of any prejudice, whether gender-based or racial. In response, the scientists explained: "The understanding of direct and indirect prejudices based on the inherent stereotypes of race, ethnicity, and culture is rather subtle. In the future, an important direction of this work is to be able to quantify and eliminate these biases."

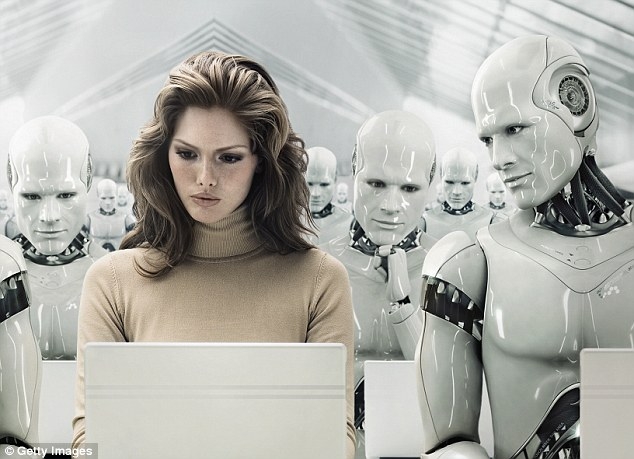

Of course, it is impossible to completely eliminate prejudice. The matching rules are in the hands of human beings. As long as human prejudice still exists, the prejudice of robots will not disappear.

Via dailymail